🔒 Safety-certifiable Multi-Sensor Fusion for Robotic Navigation in Urban Scenes

Visual and LiDAR SLAM methods are challenged by complex urban scenarios, especially when autonomous systems require safety certification. In this project, we study the mechanisms by which dynamic scenes degrade visual/LiDAR SLAM methods. We also develop safety-certifiable navigation algorithms that can quantify and guarantee the reliability of localization results. We seek to answer three questions: how dynamic objects affect the state estimation of visual/LiDAR SLAM methods, how to improve the robustness of these methods, and how to provide safety-quantifiable localization for robots in complex urban environments.

Recent News

- The manuscript "Safety-quantifiable Line Feature-based Monocular Visual Localization with 3D Prior Map" has been accepted by IEEE Transactions on Intelligent Transportation Systems. The accompanying video is available on YouTube and Bilibili.

- September 2022: One paper accepted in IET Intelligent Transport Systems.

  - Zhong, Y., Huang, F., Zhang, J., Wen, W., Hsu, L.-T.: Low-cost solid-state LiDAR/inertial-based localization with prior map for autonomous systems in urban scenarios. IET Intell. Transp. Syst., 2022.

- August 2022: One paper on LiDAR SLAM accepted in NAVIGATION: Journal of the Institute of Navigation.

  - Wen, W., Hsu, L.-T.: AGPC-SLAM: Absolute Ground Plane Constrained 3D Lidar SLAM. NAVIGATION, 69(3), 2022.


Related Papers

2025

-

POPL-SLAM: A Pose-Only Representation-Based Visual-Inertial SLAM With Point and Structural Line Features.

IEEE Transactions on Aerospace and Electronic Systems, 62, 1509-1525, 2025. -

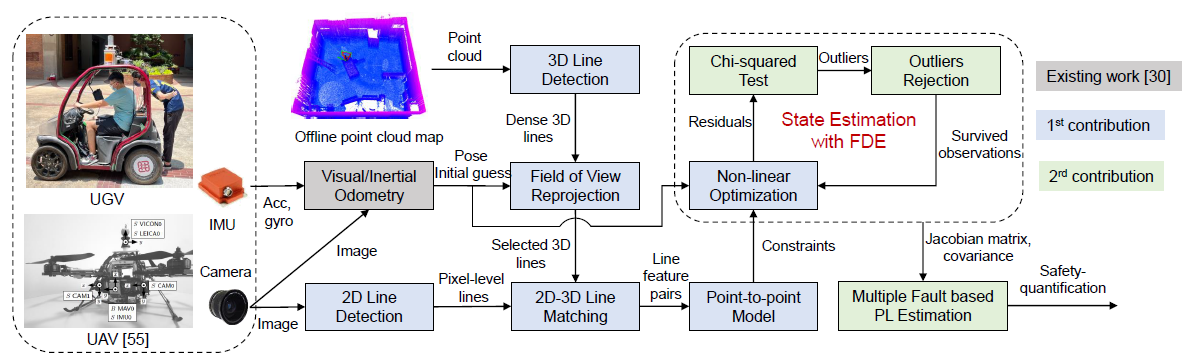

Safety-quantifiable Line Feature-based Monocular Visual Localization with 3D Prior Map.

IEEE Transactions on Intelligent Transportation Systems, 2025. -

Fault Detection Algorithm for Gaussian Mixture Noises: An Application in Lidar/IMU Integrated Localization Systems.

NAVIGATION: Journal of the Institute of Navigation, 72(1), 2025. -

Multi-Sensor Plug-and-Play Navigation Based on Resilient Information Filter.

IEEE Sensors Journal, 2025. -

A Novel Lie Group-based Reliable IMM Estimation Method for SINS/GNSS/OD/NHC Integrated Navigation in Complex Environments.

IEEE Internet of Things Journal, 2025. -

Graph-Based Indoor 3D Pedestrian Location Tracking With Inertial-Only Perception.

IEEE Transactions on Mobile Computing, 2025.

2024

- Safety-Quantifiable Planar-Feature-based LiDAR Localization with a Prior Map for Intelligent Vehicles in Urban Scenarios. IEEE Transactions on Intelligent Vehicles, 2024.
- Factor Graph Optimization-Based Smartphone IMU-Only Indoor SLAM With Multi-Hypothesis Turning Behavior Loop Closures. IEEE Transactions on Aerospace and Electronic Systems, 2024.
- Tightly-coupled Visual/Inertial/Map Integration with Observability Analysis for Reliable Localization of Intelligent Vehicles. IEEE Transactions on Intelligent Vehicles, 2024.
- Integrity-Constrained Factor Graph Optimization for GNSS Positioning in Urban Canyons. NAVIGATION: Journal of the Institute of Navigation, 2024.
- FGO-MFI: Factor Graph Optimization-based Multi-sensor Fusion and Integration for Reliable Localization. Measurement Science and Technology, 35(8), 086303, 2024.

2023

-

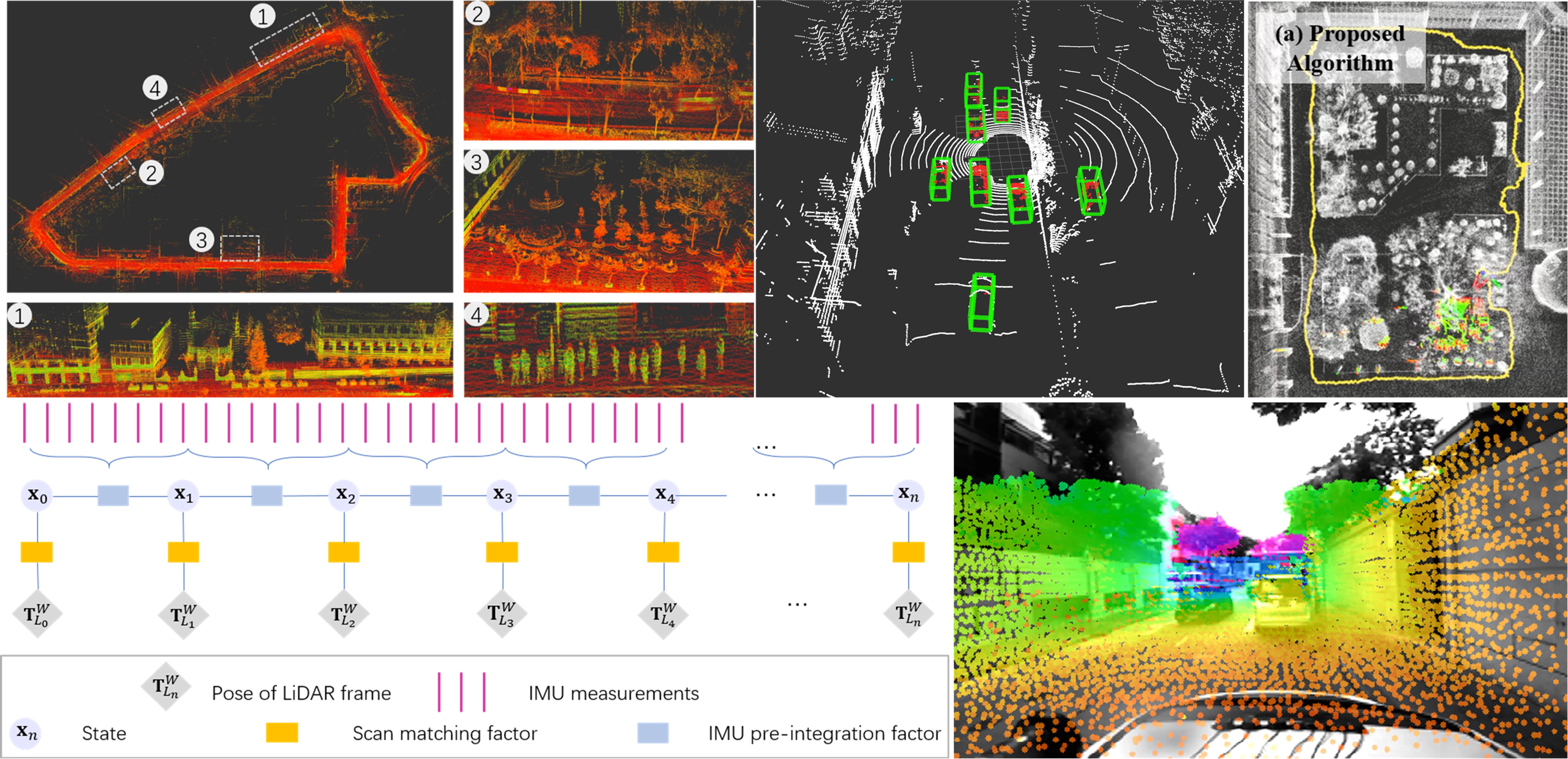

GLIO: Tightly-coupled GNSS/LiDAR/IMU Integration for Continuous and Drift-free State Estimation of Intelligent Vehicles in Urban Areas.

IEEE Transactions on Intelligent Vehicles, 2023. -

Low-cost Solid-state LiDAR/Inertial Based Localization with Prior Map for Autonomous Systems in Urban Scenarios.

IET Intelligent Transport Systems, 17(3), 474-486, 2023.

2018–2022

- AGPC-SLAM: Absolute Ground Plane Constrained 3D Lidar SLAM. NAVIGATION: Journal of the Institute of Navigation, 69(3), 2022.
- Coarse-to-Fine Loosely-Coupled LiDAR-Inertial Odometry for Urban Positioning and Mapping. Remote Sensing, 13, 2371, 2021.
- Point Wise or Feature Wise? A Benchmark Comparison of Publicly Available Lidar Odometry Algorithms in Urban Canyons. IEEE Intelligent Transportation Systems Magazine, 2021.
- Robust Visual-Inertial Integrated Navigation System Aided by Online Sensor Model Adaption for Autonomous Ground Vehicles in Urban Areas. Remote Sensing, 12(10), 1686, 2020.
- Performance Analysis of NDT-based Graph SLAM for Autonomous Vehicle in Diverse Typical Driving Scenarios of Hong Kong. Sensors, 18, 3928, 2018.

Acknowledgements and Collaborators

This research is supported by academic, government, and industry partners, including The Hong Kong Polytechnic University, the Guangdong Basic and Applied Basic Research Foundation, and Huawei Technologies. We collaborate with leading research groups in multi-sensor fusion and safety-certifiable navigation.

Projects

Safety-certified Multi-source Fusion Positioning for Autonomous Vehicles in Complex Scenarios

February 16, 2025

Innovation and Technology Commission

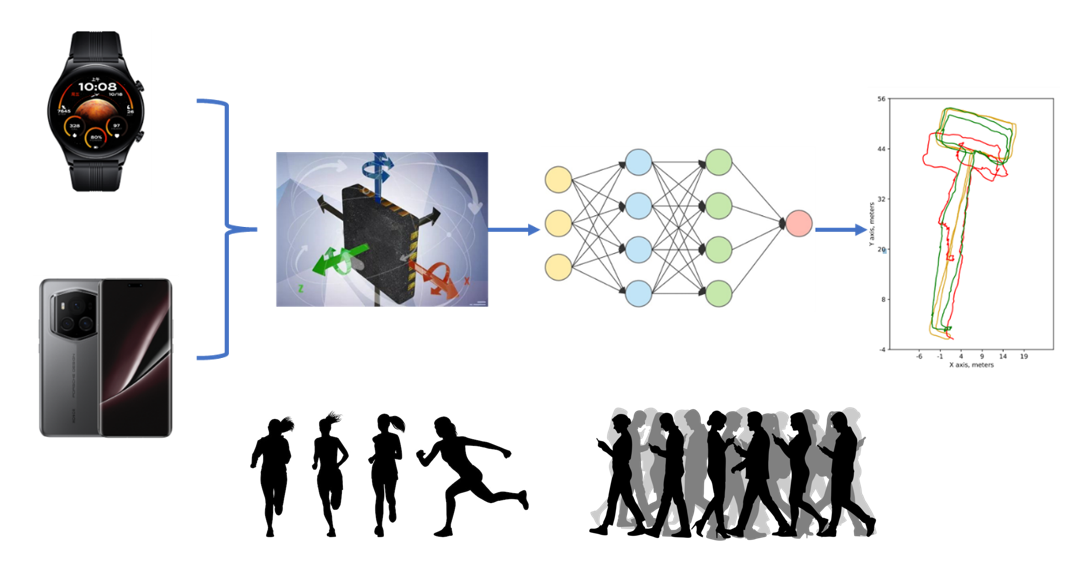

AI-assisted Inertial Navigation System

October 14, 2024

This project aims to develop a deep learning-based inertial navigation algorithm that uses accelerometer, gyroscope, and magnetometer data from smart wearables and smartphones to infer the user's position and movement trajectory, together with corresponding confidence levels.